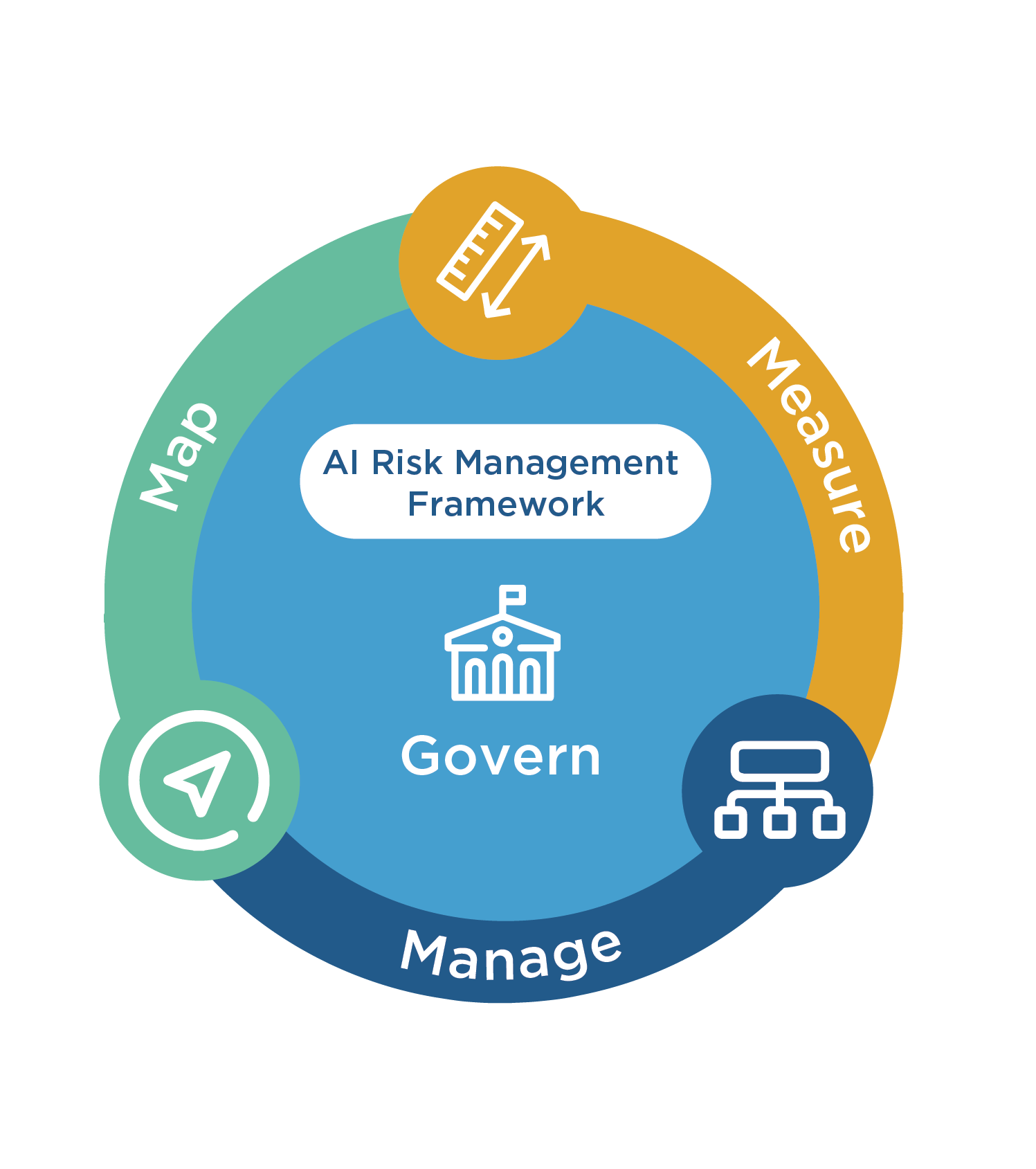

On January 26, 2023, the National Institute of Standards and Technology (NIST) released the first version of the Artificial Intelligence Risk Management Framework (AI RMF).[1] The AI RMF is a voluntary resource meant to help organizations “manage the many risks of AI and promote trustworthy and responsible development and use of AI systems.”[2]

To support the AI RMF’s goals, NIST released several companion resources: the NIST AI RMF Playbook,[3] an AI RMF Explainer Video,[4] an AI RMF Roadmap,[5] an AI RMF Crosswalk,[6] and statements from organizations and individuals interested in the AI RMF’s success.[7] Together, these resources give organizations a comprehensive toolbox for identifying and managing AI risks.

Given growing regulatory scrutiny of AI, these resources, although voluntary, offer important insight into what regulators are likely to expect in AI products, services, and systems.

Background

The US Federal Government has long recognized the need for AI regulation. In 2016, the National Science and Technology Council produced a report stating that “the approach to regulation of AI-enabled products to protect public safety should be informed by assessment of the aspects of risk.”[8] In 2018, President Donald Trump signed a law establishing the National Security Commission on Artificial Intelligence to consider how to defend against AI threats and promote AI innovation.[9]

In 2019, following Executive Order 13859,[10] the White House’s Office of Science and Technology Policy released guidance detailing the ten principles that Federal agencies should consider when determining how to regulate AI.[11] In response, NIST released a position paper, which called for US agencies to create globally relevant, non-discriminatory AI standards.

Recognizing that AI has the potential to transform every sector of the US economy and society, Congress passed the National AI Initiative Act of 2020, which established the National Artificial Intelligence Initiative and directed NIST to “develop voluntary standards for artificial intelligence systems.”[12]

On July 29, 2021, NIST issued a Request for Information to Help Develop an AI Risk Management Framework,[13] asking individuals, groups, and organizations to comment on the goals of the AI RMF and on how those goals should be achieved. On October 15, 2021, NIST published a summary analysis of those comments,[14] and on December 13, 2021, the agency published a concept paper incorporating input from the initial Request for Information.[15]

NIST released a first draft of the AI RMF on March 17, 2022,[16] and held a workshop that same month.[17] Based on the comments received, the agency released a revised second draft on August 18, 2022,[18] and held another workshop in October 2022.[19]

About three months later, on January 26, 2023, NIST released the first version of the AI RMF.

Seven characteristics of trustworthy AI

As a flexible framework designed to adapt to a wide range of systems, products, and organizations, the AI RMF does not prescribe specific technical requirements that an AI system must satisfy before it is considered trustworthy. Instead, the AI RMF identifies characteristics of trustworthy AI that must be balanced “based on the AI system’s context of use.”[20]

These characteristics are:

- Valid and reliable

  - Valid: Confirmation, through the provision of objective evidence, that the requirements for the AI system’s specific intended use or application have been fulfilled. (ISO 9000:2015.)

  - Reliable: The ability of an AI system to perform as required, without failure, for a given time interval, under given conditions, including the entire lifetime of the system. (ISO/IEC TS 5723:2022.)

  - Accurate: The closeness of the AI system’s results of observations, computations, or estimates to the true values or the values accepted as being true. (ISO/IEC TS 5723:2022.)

  - Robust / Generalized: The ability of an AI system to maintain its level of performance under a variety of circumstances, which includes performing in ways that minimize potential harm to people if it is operating in an unexpected setting. (ISO/IEC TS 5723:2022.)

- Safe

  - AI systems should not, under defined conditions, lead to a state in which human life, health, property, or the environment is endangered. (ISO/IEC TS 5723:2022.)

- Secure and resilient

  - Secure: AI systems should maintain confidentiality, integrity, and availability through protection mechanisms that prevent unauthorized access and use. (NIST Cybersecurity Framework and Risk Management Framework.)

  - Resilient: AI systems, as well as the ecosystems in which they are deployed, are resilient if they can withstand unexpected adverse events or unexpected changes in their environment or use, or if they can maintain their functions and structure in the face of internal and external change and degrade safely and gracefully when necessary. (ISO/IEC TS 5723:2022.)

- Accountable and transparent

  - Transparent: Information about an AI system and its outputs should be available to individuals interacting with such a system, regardless of whether they are even aware that they are doing so, and should be tailored to the role or knowledge of AI actors or individuals interacting with or using the AI system.

  - Accountable: AI systems should incorporate actionable redressability related to AI system outputs that are incorrect or otherwise lead to negative impacts.

- Explainable and interpretable

  - Explainable: Explanations should describe how the AI system functions, tailored to individual differences such as the user’s role, knowledge, and skill level.

  - Interpretable: The AI system should communicate why it made a particular prediction or recommendation. (“Four Principles of Explainable Artificial Intelligence” and “Psychological Foundations of Explainability and Interpretability in Artificial Intelligence.”[21])

- Privacy-enhanced

  - Privacy values such as anonymity, confidentiality, and control should guide choices for AI system design, development, and deployment, and privacy-enhancing technologies (PETs) may be needed to support privacy-enhanced AI design.

- Fair, with harmful bias managed

  - Fair: AI systems should incorporate equality and equity by addressing issues such as harmful bias and discrimination, which includes taking into consideration cultural context and demographic differences.

  - With harmful bias managed: AI systems should consider and manage three major categories of AI bias:

    - Systemic: Bias found in the AI datasets, in the organizational norms, practices, and processes across the AI lifecycle, and in the broader society that uses the AI system.

    - Computational and statistical: Bias found in AI datasets and algorithmic processes, often stemming from systematic errors due to non-representative samples.

    - Human-cognitive: Bias relating to how an individual or group perceives AI system information to make a decision or fill in missing information, or how humans think about the purposes and functions of an AI system.

Practical benefit of complying with the AI RMF

As a voluntary framework, the AI RMF does not mandate compliance with its principles; however, as the NIST Cybersecurity Framework demonstrates, voluntary compliance may help shield an organization from legal risks.

The NIST Cybersecurity Framework offers a risk-based approach to cybersecurity and a methodology for developing a comprehensive information security program. Like the AI RMF, the Cybersecurity Framework is voluntary, and therefore does not form the basis for any regulatory action.

Yet if a cybersecurity incident occurs, an organization that has implemented the Cybersecurity Framework can use its adherence in its favor. For example, if a regulator alleges that the organization’s cybersecurity practices were negligent, the organization may be able to rebut the allegation by demonstrating that its program was designed in accordance with the Cybersecurity Framework and was therefore reasonably designed to counter foreseeable risks.

Compliance with the AI RMF may produce similar benefits, given that NIST created the AI RMF for AI industry stakeholders to “cultivate trust in the design, development, use, and evaluation of AI technologies and systems in ways that enhance economic security and improve quality of life.”[22]

[1] https://www.nist.gov/itl/ai-risk-management-framework

[2] https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf

[3] https://pages.nist.gov/AIRMF/

[4] https://www.nist.gov/video/introduction-nist-ai-risk-management-framework-ai-rmf-10-explainer-video

[5] https://www.nist.gov/itl/ai-risk-management-framework/roadmap-nist-artificial-intelligence-risk-management-framework-ai

[6] https://www.nist.gov/itl/ai-risk-management-framework/crosswalks-nist-artificial-intelligence-risk-management-framework

[7] https://www.nist.gov/itl/ai-risk-management-framework/perspectives-about-nist-artificial-intelligence-risk-management

[8] https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf

[9] https://www.govinfo.gov/content/pkg/COMPS-15483/uslm/COMPS-15483.xml; https://www.nscai.gov/

[10] https://trumpwhitehouse.archives.gov/presidential-actions/executive-order-maintaining-american-leadership-artificial-intelligence/

[11] https://www.whitehouse.gov/wp-content/uploads/2020/01/Draft-OMB-Memo-on-Regulation-of-AI-1-7-19.pdf

[12] https://www.congress.gov/bill/116th-congress/house-bill/6216

[13] https://www.federalregister.gov/documents/2021/07/29/2021-16176/artificial-intelligence-risk-management-framework

[14] https://www.nist.gov/system/files/documents/2021/10/15/AI%20RMF_RFI%20Summary%20Report.pdf

[15] https://www.nist.gov/system/files/documents/2021/12/14/AI%20RMF%20Concept%20Paper_13Dec2021_posted.pdf

[16] https://www.nist.gov/system/files/documents/2022/03/17/AI-RMF-1stdraft.pdf

[17] https://www.nist.gov/news-events/events/2022/03/building-nist-ai-risk-management-framework-workshop-2

[18] https://www.nist.gov/system/files/documents/2022/08/18/AI_RMF_2nd_draft.pdf

[19] https://www.nist.gov/news-events/events/2022/10/building-nist-ai-risk-management-framework-workshop-3

[20] https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf

[21] https://www.nist.gov/artificial-intelligence/ai-fundamental-research-explainability

[22] https://www.nist.gov/itl/ai-risk-management-framework/ai-risk-management-framework-faqs